Remote sensing has enabled the production of up-to-date crop-type maps that support food security, agricultural management, and research. However, crop-type maps remain largely limited to regions where ground-truth labels are available to train models. In many cases, optical features do not transfer well across geographies: models trained in one area often perform poorly when applied elsewhere. While recent work shows that combining multiple sensors (e.g., optical, SAR, LiDAR) can improve transferability and generalization (see, e.g., Adrah et al. 2025 and Di Tommaso et al. 2021), the challenge of label scarcity for crop-type classification remains. Moreover, the transfer potential of newer satellite-derived embeddings has not yet been widely or systematically evaluated.

Recently, Google DeepMind released AlphaEarth Embeddings, which provide an annual 64-dimensional embedding vector for every 10 × 10 m pixel globally by fusing vast amounts of Earth-observation data, including optical, radar, LiDAR, climate, and other auxiliary sources. See the AlphaEarth paper here, and the data here.

This embedding product has drawn considerable attention for its potential to enable, scale, and accelerate diverse geospatial workflows, with use cases spanning land-cover classification, ecosystem mapping, and environmental monitoring. For example, the blog post here presents several use cases of satellite embeddings, and the multi-part tutorial here by Spatialthoughts covers supervised and unsupervised learning, along with a deep-dive video by Ujaval Gandhi.

In this article, I take an experimental approach to assess how well crop-type classification models built on AlphaEarth embeddings generalize geographically. I leave aside the hype and challenges of embeddings, which I discussed in depth in this article, and focus instead on feasibility and robustness, while acknowledging that many other foundation models may soon emerge. For now, I use AlphaEarth because its embeddings are readily accessible, well documented, and widely discussed. All code and data used in these experiments are publicly available and linked where relevant.

The Question

Can satellite embeddings improve the generalization of crop-type classification models across space and time? In other words, how well do embedding-based features transfer across different geographies or different years?

Experimental Design

To test this, I conducted a series of simple supervised crop-type classification experiments inspired by the approaches of Di Tommaso et al. 2021 and my previous work (Adrah et al. 2025), which assessed the transferability and generalization of GEDI-derived features. In addition to spatial transfer, I also evaluated temporal transfer.

The experiments focus on four globally significant crops: wheat, rice, corn, and barley.

- Input features: 64-dimensional AlphaEarth Satellite Embeddings
- Class labels: US Cropland Data Layer (CDL), Registre Parcellaire Graphique (RPG), or both
- Classifier: Random Forest
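Combining CDL and RPG labels requires mapping each dataset's crop codes onto the four shared classes. The mapping used in these experiments is not described here, so the following is only a minimal sketch of one possible approach. The CDL values follow the USDA NASS Cropland Data Layer legend; the RPG `CODE_CULTU` values (`BTH`, `ORH`, and so on), the choice to merge winter, spring, and durum wheat into a single "wheat" class, and the `harmonize` helper itself are assumptions to verify against the RPG nomenclature for the year in question.

```python
from typing import Optional

# CDL raster values (USDA NASS CDL legend) mapped to the four target classes.
# Double-crop classes (e.g. winter wheat followed by soybeans) are left out here.
CDL_TO_CLASS = {
    1: "corn",     # Corn
    3: "rice",     # Rice
    21: "barley",  # Barley
    22: "wheat",   # Durum Wheat
    23: "wheat",   # Spring Wheat
    24: "wheat",   # Winter Wheat
}

# ASSUMPTION: illustrative RPG CODE_CULTU values; check the official RPG
# nomenclature for the year you use before relying on these codes.
RPG_TO_CLASS = {
    "MIS": "corn",    # maïs
    "RIZ": "rice",    # riz
    "ORH": "barley",  # orge d'hiver
    "ORP": "barley",  # orge de printemps
    "BTH": "wheat",   # blé tendre d'hiver
    "BDH": "wheat",   # blé dur d'hiver
}

CLASSES = ("wheat", "rice", "corn", "barley")


def harmonize(source: str, code) -> Optional[str]:
    """Hypothetical helper: return the shared class name, or None for other crops."""
    if source == "CDL":
        return CDL_TO_CLASS.get(code)
    if source == "RPG":
        return RPG_TO_CLASS.get(code)
    raise ValueError(f"unknown label source: {source!r}")
```

Samples whose code maps to `None` would simply be dropped before training, so both label sources end up sharing one class vocabulary.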

Summary of Crop Classification Experiments

This table details the setup, training and testing data, and comparison metrics for the satellite embedding experiments and the Sentinel-2 baseline. All experiments used a Random Forest classifier in Google Earth Engine (GEE).

| Experiment | Type of Generalization | Training Data (Source & Sample) | Testing Data (Source & Sample) | Year(s) Reported | Reported Metric |
|---|---|---|---|---|---|
| a | Geographic Transfer (US to France) | Random sample from CDL (US) | RPG (France) | 2020 | F-score |
| b | Geographic Transfer (France to US) | Random sample from RPG (France) | CDL (US) | 2020 | F-score |
| c | Spatial Generalization (Joint) | Random samples from both CDL (US) and RPG (France) | Independent samples from CDL (US) and RPG (France) | 2020 | F-score |
| d | Temporal Generalization (Within-US) | CDL samples from all years (2000–2024) | CDL for each year (2000–2024) | 2000–2024 | Average F-score |
| Sentinel-2 Baseline (a & b) | Comparison baseline for a & b | Same training data as Exp. a & b | Same testing data as Exp. a & b | 2020 | F-score |

Results

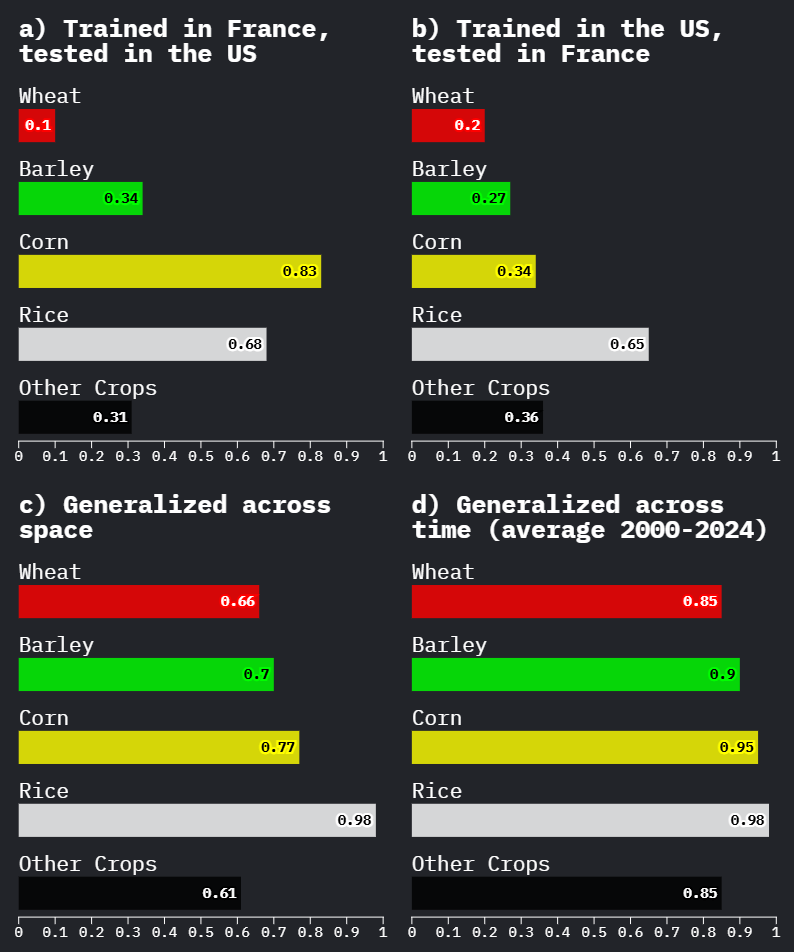

Overall, the embedding features generalize surprisingly well, particularly in the spatial generalization (c) and temporal generalization (d) experiments.

However, one of the most striking findings is the poor transferability for wheat and barley in the two geographic transfer settings (a and b), despite strong performance on the other crops.

My hypothesis is that this is a matter of timing, i.e., the alignment between the annual compression of the embeddings and the wheat season. But first, let’s see how this result compares to Sentinel-2 features.

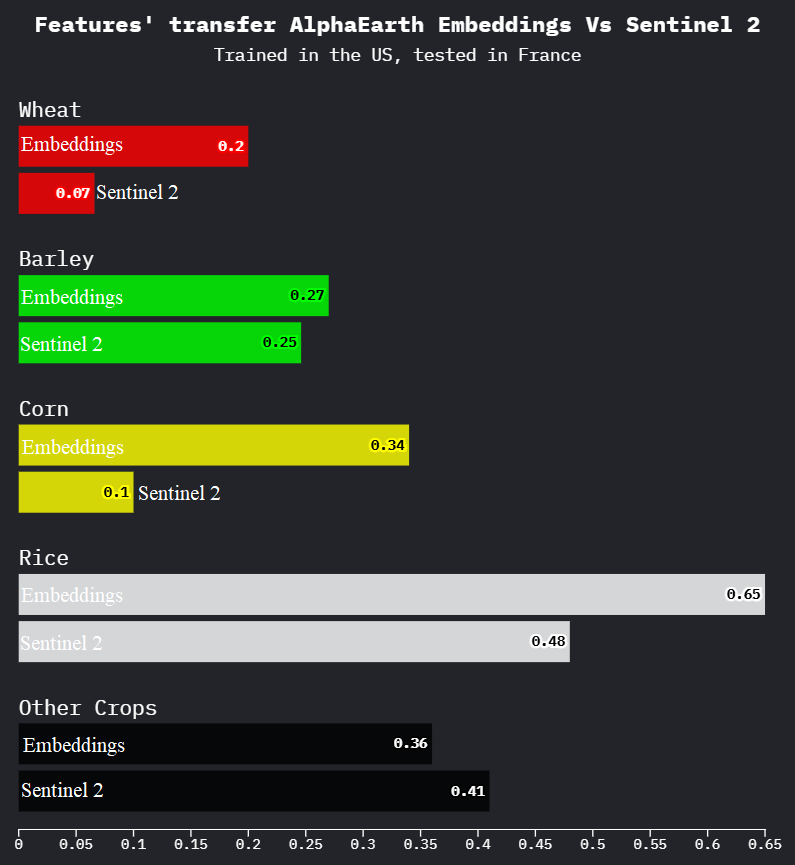

Comparison with Sentinel-2

F-scores obtained with embedding features are higher than those obtained with Sentinel-2 features in almost all experiments.

However, using Sentinel-2 for comparison in this setting should not be interpreted as a definitive benchmark of which feature set transfers “better.” A proper Sentinel-2 generalization study would require phenology-aware sampling, multiple temporal composites (percentiles, seasonal windows), and additional spectral indices. For more on Sentinel-2 transfer, see this interesting recent piece by Tong, Wang et al. 2025.
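To make "multiple temporal composites (percentiles, seasonal windows)" more concrete, here is a minimal single-pixel sketch. It assumes a list of dated observations (for example, NDVI from cloud-free scenes) and hypothetical autumn and spring windows for a Sept–June wheat season, and it summarizes each window with nearest-rank percentiles. In GEE, the equivalent step would typically filter an image collection by date and reduce it with `ee.Reducer.percentile`, whose interpolation may differ slightly from the nearest-rank method used here.

```python
import math
from datetime import date


def nearest_rank_percentile(values, q):
    """Nearest-rank percentile (q in 0-100) of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def seasonal_percentiles(observations, windows, percentiles=(10, 50, 90)):
    """Turn one pixel's time series into per-window percentile features.

    observations: list of (date, value) pairs.
    windows: dict mapping a window name to an inclusive (start, end) date pair.
    Returns a flat dict such as {"autumn_p50": ..., "spring_p90": ...};
    a window with no observations yields None for each of its percentiles.
    """
    features = {}
    for name, (start, end) in windows.items():
        in_window = [v for d, v in observations if start <= d <= end]
        for q in percentiles:
            key = f"{name}_p{q}"
            features[key] = nearest_rank_percentile(in_window, q) if in_window else None
    return features


# ASSUMPTION: illustrative windows and values, not data from the experiments.
windows = {
    "autumn": (date(2019, 9, 1), date(2019, 11, 30)),
    "spring": (date(2020, 3, 1), date(2020, 5, 31)),
}
obs = [
    (date(2019, 10, 5), 0.25),
    (date(2019, 11, 10), 0.30),
    (date(2020, 3, 15), 0.55),
    (date(2020, 4, 20), 0.80),
    (date(2020, 5, 25), 0.70),
]
features = seasonal_percentiles(obs, windows)
```

Each window contributes its own percentile features, so a classifier sees early-season and peak-season signals separately rather than one calendar-year summary.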

Interestingly, Sentinel-2 features also perform poorly for wheat and barley in the geographic transfer experiments. This indicates that the issue is not unique to AlphaEarth embeddings and supports my hypothesis that temporal alignment might be the cause.

Interpretation

The poor performance for wheat and barley in the geographic transfer experiments is likely due to mismatched agricultural calendars.

A “2020” embedding vector (a Jan–Dec composite) spans parts of two winter-wheat cycles in regions where wheat is grown from September to June. This creates two distinct issues for classification:

1. Missing Data: The annual embedding excludes the early phenological stages from Sept–Dec 2019, which are critical for distinguishing winter wheat. This missing information directly limits model performance.

2. Added Noise: The annual embedding may also include signals from the start of the next cycle. Even if the embedding captures temporal patterns internally, a simple downstream model such as a Random Forest cannot reliably decode these compressed, overlapping cues. To the classifier, this manifests as temporal blurring that obscures key phenological distinctions.
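Both effects can be quantified with simple date arithmetic. This sketch assumes an idealized winter-wheat season running from 1 September to 30 June (the Sept–June window mentioned above); actual sowing and harvest dates vary by region and year, and the helper names are hypothetical.

```python
from datetime import date


def overlap_days(a_start, a_end, b_start, b_end):
    """Number of days shared by two inclusive date ranges (0 if they are disjoint)."""
    start, end = max(a_start, b_start), min(a_end, b_end)
    return max(0, (end - start).days + 1)


def calendar_year_coverage(season_start, season_end, year):
    """Compare a crop season with the Jan-Dec window of an annual composite."""
    year_start, year_end = date(year, 1, 1), date(year, 12, 31)
    season_len = (season_end - season_start).days + 1
    year_len = (year_end - year_start).days + 1
    shared = overlap_days(season_start, season_end, year_start, year_end)
    return {
        "season_days": season_len,
        # Share of the crop season that the annual composite actually sees.
        "season_fraction_in_year": shared / season_len,
        # Share of the composite drawn from outside this season
        # (post-harvest summer months plus the start of the next cycle).
        "year_fraction_outside_season": (year_len - shared) / year_len,
    }


# ASSUMPTION: idealized 2019-2020 winter-wheat season, Sept 1 to June 30.
stats = calendar_year_coverage(date(2019, 9, 1), date(2020, 6, 30), 2020)

# Days of the next season (sown in Sept 2020) that fall inside the 2020 composite.
next_cycle_days = overlap_days(date(2020, 9, 1), date(2021, 6, 30),
                               date(2020, 1, 1), date(2020, 12, 31))
```

Under these assumptions, the 2020 composite sees 182 of the 304 days of the 2019–2020 season (about 60%), misses the Sept–Dec 2019 stages entirely, and draws 122 of its 366 days from the following cycle.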

To test whether the issue is actually tied to the temporal window, I reran the Sentinel-2 baseline using a crop-specific wheat calendar composite, rather than the calendar year.

Result: The wheat F-score more than doubled, confirming that temporal aggregation is a major factor in model transferability.
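Switching from a calendar-year composite to a crop-specific one only changes the date window. Here is a minimal sketch that builds such a window for a season that may cross the calendar-year boundary. The Sept–June wheat window is an assumption, not necessarily the exact calendar used in the rerun, and `season_window` is a hypothetical helper. It returns an exclusive end date, which matches how `ee.ImageCollection.filterDate` treats its end argument.

```python
from datetime import date


def season_window(harvest_year, start_month, end_month):
    """Return (start, end_exclusive) ISO date strings for a season ending in harvest_year.

    If start_month > end_month, the season starts in the previous calendar year
    (e.g. winter wheat sown in September and harvested by June).
    """
    start_year = harvest_year - 1 if start_month > end_month else harvest_year
    start = date(start_year, start_month, 1)
    # Exclusive end: the first day of the month after end_month.
    if end_month == 12:
        end_exclusive = date(harvest_year + 1, 1, 1)
    else:
        end_exclusive = date(harvest_year, end_month + 1, 1)
    return start.isoformat(), end_exclusive.isoformat()


wheat_2020 = season_window(2020, 9, 6)      # ASSUMPTION: Sept-June wheat calendar
calendar_2020 = season_window(2020, 1, 12)  # calendar-year window
```

For example, with the GEE Python API, `ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED').filterDate(*wheat_2020)` would select scenes from the 2019–2020 wheat season before compositing; the choice of Sentinel-2 collection here is also an assumption.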

I leave deeper interpretation to the reader, but here is my main takeaway:

Embeddings are powerful, but temporal alignment matters.

I explore this temporal-alignment issue in creating embeddings, along with broader challenges and opportunities, in my article: Satellite Embeddings in Geospatial Workflows: Navigating the Challenges and the Excitement.

#GeoAI #EarthObservation #EarthAI #GeoEmbeddings #AI4EO #GEOINT #PhysicalAI #RemoteSensing
